MEGA Vision: Integrating Reef Photogrammetry Data into Immersive Mixed Reality Experiences

Published June 11th 2024

This study describes how the MEGA Vision mixed reality application brings the underwater world to life by combining high-resolution photogrammetry with augmented reality. The platform lets users explore realistic 3D reconstructions of coral reefs and submerged cultural heritage sites in an engaging, educational setting. Developed with Unity and Vuforia, the app fosters public understanding of marine ecosystems and maritime history, bridging the gap between scientific research and conservation through accessible, interactive technology.

A Comparison of the Diagnostic Accuracy of in-situ and Digital Image-Based Assessments of Coral Health and Disease

Published May 5th 2020

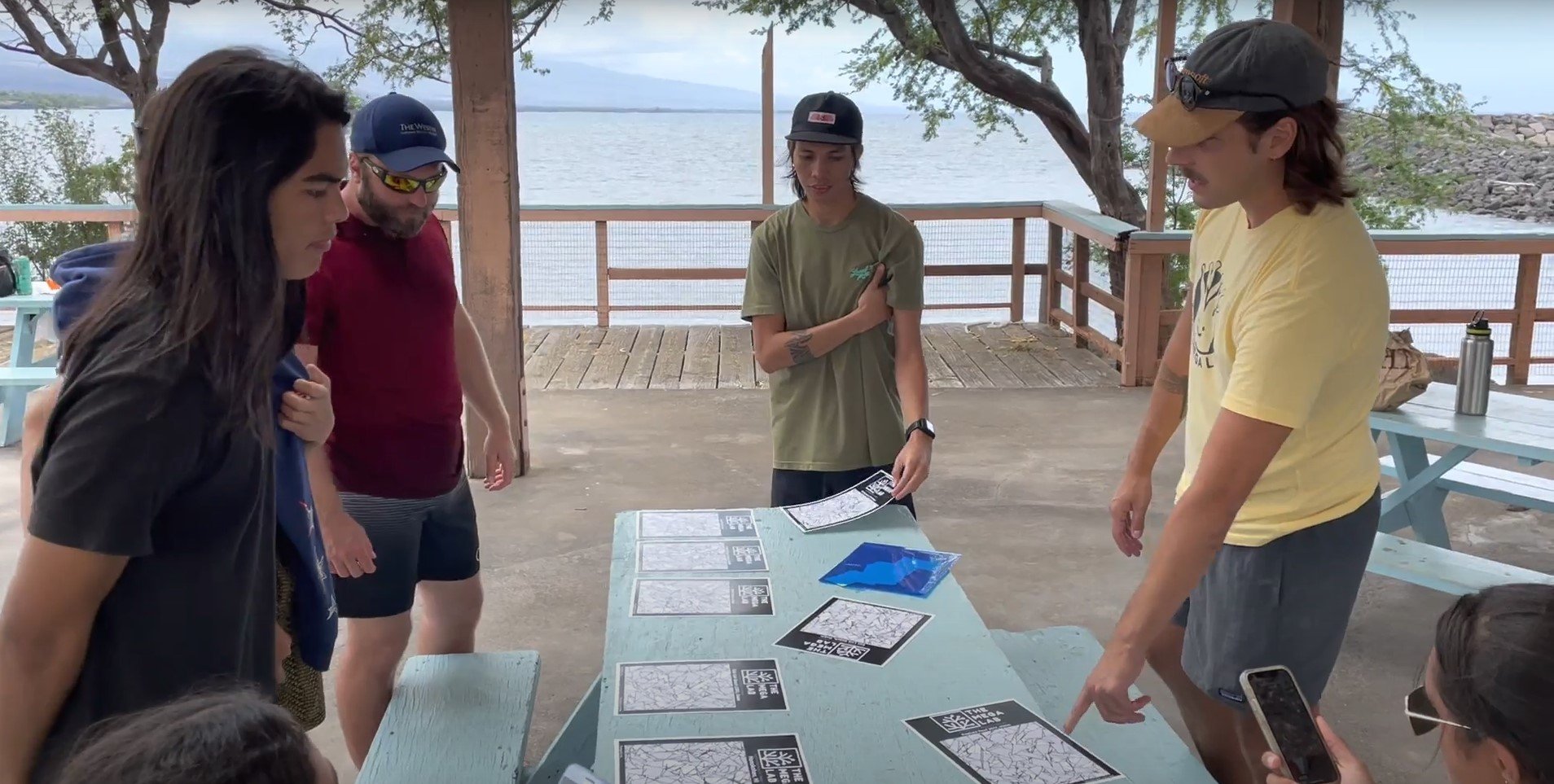

This study compares in-situ visual surveys and digital image-based analyses for assessing coral health on reefs around Hawaii Island. It finds that in-situ methods are more sensitive (better at identifying true cases of disease) but less specific (prone to false positives) compared to digital methods using high-resolution orthomosaics. Despite these differences, both approaches are similarly accurate and viable for monitoring coral health, addressing the growing need for effective disease detection as coral diseases become more prevalent globally.
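The trade-off described above can be made concrete with the standard definitions of sensitivity, specificity, and accuracy. The following is a minimal sketch: the counts are invented purely for illustration and are not results from the study, and the names `ConfusionCounts`, `in_situ_example`, and `digital_example` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts of colonies by true status and by what the survey reported."""
    tp: int  # diseased, reported diseased
    fp: int  # healthy, reported diseased
    tn: int  # healthy, reported healthy
    fn: int  # diseased, reported healthy


def sensitivity(c: ConfusionCounts) -> float:
    """Fraction of truly diseased colonies that the method detects."""
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    """Fraction of truly healthy colonies that the method clears."""
    return c.tn / (c.tn + c.fp)


def accuracy(c: ConfusionCounts) -> float:
    """Fraction of all colonies classified correctly."""
    return (c.tp + c.tn) / (c.tp + c.fp + c.tn + c.fn)


# Illustrative, made-up counts (NOT data from the study):
# one method catches more disease but raises more false alarms.
in_situ_example = ConfusionCounts(tp=45, fp=20, tn=30, fn=5)
digital_example = ConfusionCounts(tp=35, fp=10, tn=40, fn=15)

for name, counts in [("in-situ", in_situ_example), ("digital", digital_example)]:
    print(
        f"{name}: sensitivity={sensitivity(counts):.2f}, "
        f"specificity={specificity(counts):.2f}, "
        f"accuracy={accuracy(counts):.2f}"
    )
```

With these toy counts, the first method has higher sensitivity and lower specificity than the second, yet both reach the same overall accuracy, which is the kind of pattern the summary describes.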

Balancing Human and Machine Performance When Analyzing Image Cover

Published April 20th 2020

This study explores how annotation styles in computer vision impact both human effort and machine learning performance, particularly in image cover applications, such as estimating the percentage of an image covered by specific objects. By comparing different annotation methods, the research reveals that the most accurate human annotations do not always yield the best machine performance. Instead, optimizing the annotation format can balance accuracy and efficiency, enabling strong machine learning outcomes with minimal human effort.
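To illustrate what "image cover" means and why annotation style affects human effort, the sketch below estimates percent cover in two ways: from a dense per-pixel mask, and from a small number of randomly sampled point labels. This is an assumption-laden toy example, not the annotation formats or method used in the study; the function names and the 10 x 10 mask are hypothetical.

```python
import random
from typing import List

Mask = List[List[int]]  # 1 = pixel belongs to the target class, 0 = background


def cover_from_mask(mask: Mask) -> float:
    """Percent cover from a dense annotation: every pixel is labeled."""
    total = sum(len(row) for row in mask)
    covered = sum(sum(row) for row in mask)
    return 100.0 * covered / total


def cover_from_points(mask: Mask, n_points: int, seed: int = 0) -> float:
    """Percent cover from sparse annotation: only n_points random pixels are labeled.

    The mask stands in for the annotator, who would label each sampled point by eye.
    """
    rng = random.Random(seed)
    height, width = len(mask), len(mask[0])
    hits = 0
    for _ in range(n_points):
        row = rng.randrange(height)
        col = rng.randrange(width)
        hits += mask[row][col]
    return 100.0 * hits / n_points


# Hypothetical 10 x 10 image in which the left three columns are covered.
toy_mask: Mask = [[1 if col < 3 else 0 for col in range(10)] for _ in range(10)]

print(f"dense mask estimate:  {cover_from_mask(toy_mask):.1f}%")
print(f"25-point estimate:    {cover_from_points(toy_mask, n_points=25):.1f}%")
```

The dense mask requires labeling every pixel, while the point estimate needs only a handful of labels at the cost of sampling noise, which is one way the effort and accuracy of an annotation format can pull in different directions.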